Hand Gesture Recognition:-

Gesture recognition covers several components of visual action, such as the motion of the hands, facial expression, and torso movement, that together convey meaning. So far, most work in the field has focused on the manual (hand) component of gestures.

A hand gesture recognition system provides a natural, innovative, and modern way of nonverbal communication. It has a wide range of applications in human-computer interaction and sign language.

Early Stopping:-

callback_list = [

EarlyStopping(monitor='val_loss', patience=10),

ModelCheckpoint(filepath="model.h5", monitor='val_loss', save_best_only=True, verbose=1)]

- Early stopping halts training before the configured number of epochs has run. It is most helpful when the model starts to overfit.

- We must tell the callback which quantity to monitor. Here we monitor the validation loss (val_loss), and training stops once it has not improved for 10 consecutive epochs (patience=10).

- ModelCheckpoint monitors the same quantity. With save_best_only=True, it writes model.h5 only when the validation loss improves.

- Use the code below to set up the early stopping function.

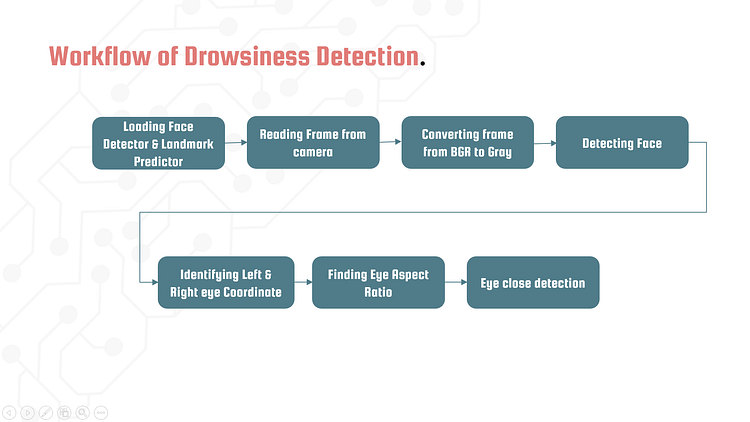
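To make the role of patience concrete, here is a minimal pure-Python sketch of the stopping rule described above. It is a simplified illustration, not the Keras implementation: the function name `simulate_early_stopping` and the loss values are made up for the example, and it assumes an epoch counts as an improvement only when the validation loss is strictly lower than the best value seen so far.

```python
def simulate_early_stopping(val_losses, patience):
    """Return (stop_epoch, best_epoch) using 0-based epoch indices.

    stop_epoch is None if training would run through every epoch.
    """
    best_loss = float("inf")
    best_epoch = None
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # Improvement: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return None, best_epoch


# Hypothetical validation losses, one per epoch.
losses = [1.00, 0.80, 0.70, 0.75, 0.72, 0.71, 0.69]
stop_epoch, best_epoch = simulate_early_stopping(losses, patience=3)
print(stop_epoch, best_epoch)  # prints: 5 2
```

In this run the loss at epoch 6 would have been better, but training has already stopped at epoch 5. This is why a checkpoint that keeps only the best model is usually paired with early stopping.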

Block Diagram Or Workflow:-

Overview

The ability to perceive the shape and motion of hands can be a vital component in improving the user experience across a variety of technological domains and platforms. For example, it can form the basis for sign language understanding and hand gesture control, and can also enable the overlay of digital content and information on top of the physical world in augmented reality. While coming naturally to people, robust real-time hand perception is a decidedly challenging computer vision task, as hands often occlude themselves or each other (e.g. finger/palm occlusions and handshakes) and lack high contrast patterns.

MediaPipe Hands is a high-fidelity hand and finger tracking solution. It employs machine learning (ML) to infer 21 3D landmarks of a hand from just a single frame. Whereas many state-of-the-art approaches rely primarily on powerful desktop environments for inference, MediaPipe Hands achieves real-time performance on a mobile phone and even scales to multiple hands. Its authors hope that providing this hand perception functionality to the wider research and development community will lead to creative use cases, new applications, and new research avenues.
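As an illustration of what the 21 landmarks make possible, the sketch below counts raised fingers from one set of landmarks. This is a common heuristic and an assumption of this example, not something the source code in this article does. It assumes the MediaPipe Hands index layout (fingertips at 8, 12, 16, 20 and the matching PIP joints at 6, 10, 14, 18), an upright hand, and normalized image coordinates in which y grows downward. The thumb is ignored for simplicity, and the function name `count_extended_fingers` is hypothetical.

```python
# (tip index, PIP joint index) for the four non-thumb fingers.
FINGER_TIP_PIP = {
    "index": (8, 6),
    "middle": (12, 10),
    "ring": (16, 14),
    "pinky": (20, 18),
}


def count_extended_fingers(landmarks):
    """Count non-thumb fingers whose tip lies above its PIP joint.

    landmarks: sequence of 21 (x, y, z) tuples in normalized image
    coordinates, where a smaller y means higher in the image.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 landmarks")
    count = 0
    for tip, pip in FINGER_TIP_PIP.values():
        if landmarks[tip][1] < landmarks[pip][1]:
            count += 1
    return count


# Synthetic hand: every landmark at the same point, then raise two fingers.
hand = [(0.5, 0.5, 0.0)] * 21
hand[6], hand[8] = (0.5, 0.4, 0.0), (0.5, 0.2, 0.0)    # index finger up
hand[10], hand[12] = (0.5, 0.4, 0.0), (0.5, 0.2, 0.0)  # middle finger up
print(count_extended_fingers(hand))  # prints: 2
```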

Source Code:-

from keras.preprocessing.image import ImageDataGenerator

from keras.layers import Conv2D

from keras.layers import MaxPooling2D

from keras.layers import Dropout

from keras.layers import Dense

from keras.layers import Flatten

from keras.callbacks import EarlyStopping, ModelCheckpoint

from keras.models import Sequential

model = Sequential()

model.add(Conv2D(32, (3, 3), input_shape = (256, 256, 1), activation = 'relu'))

model.add(MaxPooling2D(pool_size = (2, 2)))

model.add(Conv2D(64, (3, 3), activation = 'relu'))

model.add(Conv2D(64, (3, 3), activation = 'relu'))

model.add(MaxPooling2D(pool_size = (2, 2)))

model.add(Conv2D(128, (3, 3), activation = 'relu'))

model.add(MaxPooling2D(pool_size = (2, 2)))

model.add(Conv2D(256, (3, 3), activation = 'relu'))

model.add(MaxPooling2D(pool_size = (2, 2)))

model.add(Flatten())

model.add(Dense(units = 150, activation = 'relu'))

model.add(Dropout(0.25))

model.add(Dense(units = 6, activation = 'softmax'))

model.compile(optimizer = 'adam', loss = 'categorical_crossentropy', metrics = ['accuracy'])

train_datagen = ImageDataGenerator(rescale = 1./255,

rotation_range = 12.,

width_shift_range = 0.2,

height_shift_range = 0.2,

zoom_range=0.15,

horizontal_flip = True)

val_datagen = ImageDataGenerator(rescale = 1./255)

training_set = train_datagen.flow_from_directory('Dataset/train',

target_size = (256, 256),

color_mode = 'grayscale',

batch_size = 8,

classes = ['NONE','ONE','TWO','THREE','FOUR','FIVE'],

class_mode = 'categorical')

val_set = val_datagen.flow_from_directory('Dataset/val',

target_size = (256, 256),

color_mode='grayscale',

batch_size = 8,

classes=['NONE', 'ONE', 'TWO', 'THREE', 'FOUR', 'FIVE'],

class_mode='categorical')

callback_list = [

EarlyStopping(monitor='val_loss',patience=10),

ModelCheckpoint(filepath="model.h5",monitor='val_loss',save_best_only=True,verbose=1)]

model.fit(training_set,

steps_per_epoch = 37,

epochs = 25,

validation_data = val_set,

validation_steps = 7,

callbacks=callback_list

)
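To check how the 256 x 256 input shrinks on its way to the Flatten layer, the following sketch traces the spatial size through the convolution and pooling layers of the model above. It assumes the Keras defaults used in that code: 3 x 3 convolutions with 'valid' padding and stride 1, and 2 x 2 max pooling with stride 2. The helper names `conv_out`, `pool_out`, and `trace_spatial_size` are illustrative only.

```python
def conv_out(size, kernel=3):
    # 'valid' padding, stride 1: the kernel must fit entirely inside the input.
    return size - kernel + 1


def pool_out(size, pool=2):
    # 'valid' padding, stride equal to the pool size.
    return (size - pool) // pool + 1


def trace_spatial_size(size, layers):
    """Return the list of spatial sizes after each layer, starting with the input."""
    sizes = [size]
    for kind in layers:
        size = conv_out(size) if kind == "conv" else pool_out(size)
        sizes.append(size)
    return sizes


# Same order of Conv2D / MaxPooling2D layers as in the model above.
layers = ["conv", "pool", "conv", "conv", "pool", "conv", "pool", "conv", "pool"]
sizes = trace_spatial_size(256, layers)
print(sizes)  # prints: [256, 254, 127, 125, 123, 61, 59, 29, 27, 13]

# The last Conv2D layer has 256 filters, so Flatten yields 13 * 13 * 256 values.
flattened = sizes[-1] * sizes[-1] * 256
print(flattened)  # prints: 43264
```

Under these assumptions the first Dense layer receives 43,264 inputs, so most of the model's weights sit in that layer.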